Introduction

Most organizations are deploying new applications and technologies at a high rate, and without a means to adequately assess them prior to implementation, it’s difficult to accurately gauge your organization’s risk. No matter what the size or industry, it’s imperative that an organization has a standardized and repeatable process for assessing the security of the IT solutions it implements. My goal with today’s post is to provide some recommendations on how an organization can design and implement such a program.

Although I’ve worked closely with consultants over the years, I’ve worked as an internal organizational security professional for my entire career so the content is primarily aimed at those responsible for implementing a security assessment program within their own organization. Internal organizational security staff often have unique requirements and perspectives (vs external consultants) such as understanding the roles of the various business units and developing processes to meet those specific needs as well as having a good understanding of the risk/threats specific to their organization or industry. They also have to consider how to incorporate security requirements into existing business processes, handle last-minute assessment requests to meet operational needs, and perform recurring reassessments of changing technologies within their environment. I’ll cover some of these in more detail throughout this post.

Why “Assessment”?

I purposely used the word “assessment” vs “audit” or “penetration test” because (right or wrong), these other terms often come with a predefined notion of what they entail. For example, the word audit brings to mind checklists and documentation review, with much emphasis on policy content and process-based controls. Important? Yes. The primary focus of a security assessment? No. While I certainly don’t ignore process-based controls, my security assessments are typically 80-85% technical/hands-on testing.

So why not call it a penetration test? Because often penetration tests have the implied (narrower) scope of testing a network/system/application with the sole purpose of finding that one way to compromise the target. Is that wrong? Not at all. Nearly every one of my security assessments involves a penetration test. But you can’t stop there. Just because you find a SQL injection vulnerability that ultimately leads to getting root on the server doesn’t mean you should ignore the other problems with authentication, session management or access control. For me, an assessment must take a comprehensive look at all security controls – otherwise you might plug one hole but leave several others untouched.

I don’t want to give the impression that every audit or every penetration test is so narrowly scoped. I also know that the word “assessment” sometimes has its own preconceived connotations (e.g. “paperwork drill”, which I assure you is not at all what I’m going to talk about here). Frankly, you can call it anything you want – just be sure that you aren’t scoping too narrowly.

So what do I consider to be the scope of a security assessment? I define an assessment as a comprehensive analysis and testing of the technical, procedural, and physical security controls implemented or inherited by an application or system. It involves documentation review, interviews, and most importantly, hands-on testing of the controls to ensure they are implemented sufficiently.

Also, I frequently use the terms “application” and “IT solution” throughout this post but the true scope (devices, systems, etc) is really up to you. I test just about everything using this same approach – web applications, client software, mobile devices, and any other device that can be networked (from bar-code scanners to cash registers). The specific controls tested between IT solutions may vary, but the approach is very much the same.

In the sections that follow, I’ll outline some recommendations on how to develop an assessment program. Here are the sections for quick navigation:

- Defining the need – the “Why”

- Getting the right people – the “Who”

- Defining the scope – the “What”

- Developing the assessment procedures – The “How”

- Determining the timing and frequency – The “When”

- Assessment Deliverables

- Tracking vulnerabilities and mitigation actions

- The Assessment Process

- Conclusion

Defining the need – the “Why”

It may sound pretty obvious but you should identify the reason(s) why you’re implementing an assessment program. What are you trying to accomplish and how will you measure those goals? Though I shudder to hear it, sometimes the driving factor is compliance with a regulatory requirement. While it’s dangerous to base your security solely on compliance policies, sometimes these requirements can serve as a good means to obtain the resources you need to implement a quality security assessment program.

Getting the right people – the “Who”

This is by far one of the most important steps. Skilled information security professionals are required for any successful security assessment program. As I’ll outline in the sections that follow, a quality security assessment program is largely hands-on, technical testing. If you’re only reviewing questionnaires, or performing documentation reviews, you’re missing the point.

You’re probably going to be faced with assessing a wide variety of technologies and will need people that understand web, mobile, Windows, Unix, databases, etc. You may discover highly experienced and skilled individuals that can apply their knowledge to testing just about anything or you may have to find people with specific skill sets.

Obviously budget can dictate the number of personnel you can hire but it can also dictate the quality (highly skilled, experienced security professionals don’t come cheap!). If you’re a skilled penetration tester then you have a good idea what it takes and will probably have no problem hiring others with similar skill sets. If you’re not, you may consider outsourcing the hiring process or putting together an internal team of technical resources to assist in finding the right people. I’m not saying everyone has to have 10+ years of experience but you might consider options like using a team approach on some assessments so that more experienced staff can guide and train others. Like any other job function, it’s important to have a training and development program to foster an environment of continuous learning and help ensure skill-sets remain current.

Defining the scope – the “What”

When you develop a security assessment program you need to determine the scope. What types of IT solutions are you going to assess and what security controls are you going to assess for each solution?

What solutions are you going to assess?

It’s important to define what types of IT solutions you’re going to be testing. Applications? Mobile devices? Medical devices? Industrial Control Systems? New deployments? Existing solutions? Internal or externally-facing applications? Depending on available resources you may decide to start by limiting your focus, with plans to expand over time. This portion of the scope is often dictated by available manpower and expertise. Do you have the necessary expertise in-house or will you have to outsource? If you have the expertise, how many assessments can you perform in a given week/month given the number of personnel?

Budget and lead time are also factors. Outsourcing an assessment can be expensive ($20,000 – $50,000+ per application), but so is hiring quality, full-time people. As I’ll cover later, thorough assessments take time and planning (to set up test accounts, provide the necessary access/connectivity, etc). Outsourcing may add to this lead time, so if your assessments are largely last-minute “fire drills”, you may find outsourcing difficult. A good assessment program relies on standardized processes, planning, and scheduling.

Outside of available resources, another factor to consider when choosing which IT solutions to assess is the level of risk they introduce to your environment. If your resources are limited and you can only perform a certain number of assessments in a given period, it’s wise to focus on those that present the most risk. Externally facing systems might be a good start, though extremely critical internal systems may also require testing. Everything poses some level of risk, but being able to prioritize is important when your resources are limited. This requires a good understanding of your environment (i.e. an accurate system/application inventory).

What security controls are you going to assess for each solution?

Once you determine which types of solutions you plan to test, you need to determine the scope of each assessment. In other words, you’ve decided to test all newly deployed, public-facing web applications – but what does that mean? For me, it’s about taking a comprehensive look at the security controls implemented in any given system and determining their applicability. You may consider:

- Authentication – management of user credentials, user login, password reset, etc

- Session Management – transmission encryption, session tokens, session timeout, etc

- Access Control – user account provisioning and termination processes, restricted user account access, resource access control

- Auditing – log content, protection of logs, log review, etc

- Injection – SQLi, code injection, etc

- Cross Site Scripting (XSS)

- Cross Site Request Forgery (CSRF)

- Media and Data Protection – data at rest encryption, backup tape management, etc

- Host System Security and Vulnerability Management – vulnerability scanning, patch management, etc

- Incident Response, Continuity and Disaster Recovery

- Physical Security – CCTV, badge access, visitor control, etc

For each broad category, you’re going to need to identify the specific controls you want to assess. Some of these controls have a procedural element, some have a technical element, and some have both. For example, when you’re considering access control, it’s incredibly important to test the technical controls used to restrict access between similar users (lateral) and user types (vertical). It’s also important to understand the processes surrounding user account provisioning, management and termination. Are users sharing accounts? How do application administrators know when to terminate a user’s account and how soon is that accomplished? When you’re deploying a new application some of these process-based controls may not have an immediate impact on its security, but a year or two from now, when there are hundreds of terminated users with active accounts, you may have a problem! These are things that an internal security team needs to consider.

So how do you determine which controls to include in your assessment scope? Like it or not, with most organizations there’s going to be a compliance element. Are you beholden to SOX, HIPAA, PCI, etc? If so, I suggest you understand these requirements and incorporate them into your assessment process. You may consider creating a matrix of regulatory requirements mapped to individual security controls so you can clearly see how the assessment program will help you demonstrate compliance. Industry standards bodies such as NIST can also be used as a resource. The NIST security control set is published under Special Publication 800-53 (with supplemental assessment procedures provided in SP 800-53A). You could use these documents when determining which controls you want to assess (both at an application as well as an enterprise level). You may also turn to resources such as OWASP or other publications such as The Web Application Hacker’s Handbook. Finally, personal experience/expertise may help you determine which security controls you want to assess. I’ve used all of these resources when developing my assessment programs.
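As a rough illustration of the requirements-to-controls matrix mentioned above, here is a minimal Python sketch. The control category names come from the list earlier in this post, but the mappings themselves are placeholders for illustration only (they are not actual regulatory citations), and the function names are hypothetical. You would populate the matrix from the requirements that actually apply to your organization.

```python
# Hypothetical matrix of regulations mapped to the control categories they touch.
# The mappings below are illustrative placeholders, NOT actual regulatory text.
REQUIREMENT_MATRIX = {
    "PCI": {"Authentication", "Access Control", "Auditing", "Media and Data Protection"},
    "HIPAA": {"Access Control", "Auditing", "Media and Data Protection", "Physical Security"},
    "SOX": {"Access Control", "Auditing"},
}


def controls_for(regulations):
    """Return the sorted union of control categories tied to the given regulations."""
    required = set()
    for regulation in regulations:
        required |= REQUIREMENT_MATRIX.get(regulation, set())
    return sorted(required)


def regulations_for(control):
    """Return the sorted regulations that a given control category helps demonstrate."""
    return sorted(
        regulation
        for regulation, controls in REQUIREMENT_MATRIX.items()
        if control in controls
    )


if __name__ == "__main__":
    print(controls_for(["SOX", "PCI"]))
    print(regulations_for("Auditing"))
```

Keeping the matrix as plain data makes it easy to answer both questions: which controls a given regulation puts in scope, and which regulations a given control helps you demonstrate compliance with.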

Scope vs. Applicability

Just because you consider a set of security controls to be in scope, doesn’t mean they will always be applicable to every IT solution you assess. If it’s a simple web application hosting publicly-consumable data with no login functionality, then the authentication portion of your testing will be limited. However, it’s important to standardize the controls you want to consider for every solution and let the individual application determine their applicability.

Another driver of scope (or at least priority) is risk. You probably are not going to have the same assessment scope for an internal application that has no user authentication or account management, processes no sensitive data, and is not considered critical to business operations as you would an externally-facing, web application that processes sensitive employee HR data that requires 24/7 availability. Again, when you have limited resources, it’s important to prioritize your assessment efforts and risk can be a major factor.

Inheritance

While this post is focused on assessing the security of individual applications/systems, an important concept to understand when developing a security assessment program is security control inheritance. In a typical organization, you’re probably going to have several centralized IT/security solutions as well as one or more designated hosting/data centers. You’re going to want to assess these up-front and understand the security they afford to the individual applications or systems that rely on them. For example, let’s say in a given month your organization deploys six new client-server applications, with the clients being installed on a standard desktop image and the server portions installed in the designated data center. You’re certainly not going to review the GPOs implemented on the standard desktop image each time, nor are you going to conduct a physical penetration test on the data center each time – but you better know how these enterprise controls are implemented because each of those applications is inheriting security from them.

My suggestion is to take a look at your organization and determine which controls are implemented at an enterprise level. Technical solutions such as Active Directory, single sign-on, or multi-factor authentication that are going to be used by multiple applications need to be assessed, documented, and understood. Yes, you will still need to test each application’s authentication controls to ensure they are adequately implemented, but a vulnerability in an enterprise solution is inherited by each application that relies upon it.

Determining which controls should be assessed at an enterprise level can save you time and effort and addressing security vulnerabilities in these enterprise-level solutions can benefit all of the applications that rely on them.

Developing the assessment procedures – The “How”

Once you’ve determined the security control scope of your assessment, you need to determine how you’re going to assess each control. Some are going to be interview-based, some will require documentation review, and some will require hands-on testing. Let’s take another look at Access Control:

Part of controlling access to an application typically involves a process to provision/enable user accounts. To understand the account provisioning process, you’re probably going to have to talk with the application administrator to understand several things:

- How are users granted access? Is there a request process initiated by the user? How does it work?

- Is there a review process before the account is activated?

- Are there different “levels” of users and if so, what are they, how do their roles/permissions and respective access to resources differ?

- What is the technical process for adding a user to the application?

- How is user access monitored? Are there regular reviews by a designated administrator?

- How is access revoked? Is it triggered as a result of a job role change? User termination?

You may also want to review any related procedural documentation (business processes, technical guides, etc). Once you have access to test the application, you’re going to want to test exactly how access control is technically enforced:

- Modify a user id parameter to see if you can gain horizontal or vertical privilege escalation.

- Modify resource access parameters (user or document ids) to see if the application is vulnerable to insecure direct object referencing.

- Determine if resource access control can be circumvented by path manipulation (i.e., directory traversal attacks).

- Etc.
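The parameter-tampering tests above can be partly prepared with a small helper. The following is a minimal sketch, assuming the identifier is passed as a numeric query-string parameter. The URL, parameter names, and function names are hypothetical. The code only builds candidate URLs; sending them (with proper authorization) and comparing the responses against what your test account should be allowed to see is left to the tester.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tamper_parameter(url, name, candidates):
    """Return copies of `url` with query parameter `name` set to each candidate value."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    if not any(key == name for key, _ in pairs):
        raise ValueError(f"parameter {name!r} not found in {url!r}")
    tampered = []
    for candidate in candidates:
        # Swap only the targeted parameter; leave every other parameter untouched.
        new_pairs = [(k, str(candidate) if k == name else v) for k, v in pairs]
        tampered.append(urlunsplit(parts._replace(query=urlencode(new_pairs))))
    return tampered


def neighbouring_ids(value, spread=2):
    """Yield numeric ids near `value`, skipping the original and non-positive ids."""
    original = int(value)
    for candidate in range(original - spread, original + spread + 1):
        if candidate != original and candidate > 0:
            yield candidate


if __name__ == "__main__":
    # Hypothetical URL for illustration; the .test TLD is reserved and never resolves.
    base = "https://app.example.test/documents/view?user_id=1002&doc=77"
    for url in tamper_parameter(base, "user_id", neighbouring_ids("1002")):
        print(url)
```

Sequential neighbours are only one way to choose candidate values. Ids belonging to a second test account you control are usually the safest starting point, since you can confirm unauthorized access without touching real users’ data.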

For each control that you want to assess, you should have a list of items you want to test and corresponding test procedures. This ensures that you are implementing a standardized and repeatable process. Take for example, Access Control…

| Access Control | | |
|---|---|---|
| Account Provisioning and Management Process | | |
| Test Procedure | Type | Result |
| 1. Determine how users request application accounts, how those accounts are managed, and how/when access is revoked …etc… | Interview/Doc Review | Spoke with Application Administrator (John Smith) on 1/5/13. Users request accounts by… |
| Resource Access Control | | |
| Test Procedure | Type | Result |
| 1. For any client-server request where the username or id is passed as a parameter, attempt to change it to access resources for another user …etc… | Technical Test | By manipulating the user id in the following URL I was able to access the account of another user … |
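If you would rather keep test procedures as structured data than as a document table, the same layout can be sketched in Python. This is only one possible representation. The class, field, and function names are hypothetical, and the two entries simply mirror the example rows in the table above.

```python
from dataclasses import dataclass
from enum import Enum


class ProcedureType(Enum):
    INTERVIEW_DOC_REVIEW = "Interview/Doc Review"
    TECHNICAL_TEST = "Technical Test"


@dataclass
class AssessmentProcedure:
    control: str          # broad control category, e.g. "Access Control"
    subcategory: str      # e.g. "Resource Access Control"
    description: str      # what the assessor should do
    kind: ProcedureType   # how the procedure is carried out
    result: str = ""      # filled in by the assessor during the assessment

    @property
    def completed(self) -> bool:
        return bool(self.result.strip())


def access_control_template():
    """Return a fresh, unanswered copy of the Access Control procedures."""
    return [
        AssessmentProcedure(
            "Access Control",
            "Account Provisioning and Management Process",
            "Determine how users request accounts, how those accounts are "
            "managed, and how/when access is revoked.",
            ProcedureType.INTERVIEW_DOC_REVIEW,
        ),
        AssessmentProcedure(
            "Access Control",
            "Resource Access Control",
            "Where a username or id is passed as a request parameter, attempt "
            "to change it to access another user's resources.",
            ProcedureType.TECHNICAL_TEST,
        ),
    ]


def outstanding(procedures):
    """Return the procedures that do not yet have a recorded result."""
    return [p for p in procedures if not p.completed]


if __name__ == "__main__":
    procedures = access_control_template()
    procedures[0].result = "Spoke with the application administrator. Users request accounts by..."
    for procedure in outstanding(procedures):
        print(procedure.subcategory, "-", procedure.kind.value)
```

Starting each assessment from the same template is what makes the process repeatable, while the free-text result field leaves room for the tester’s judgment.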

The level of detail for these test procedures is up to you. You can go to the nth degree and try to document every single step, injection string, etc but I personally find that to be a futile effort. Applications are like snowflakes – every one is different and security testing is as much of an art as it is a science. Most experienced testers won’t need a detailed script to follow for each assessment. Plus, this isn’t some checklist-based approach that you can hand to just anyone to execute. It takes skilled professionals and every assessment requires significant critical thinking. That said, having some level of standardized procedures helps in several ways:

- It ensures your process is repeatable – you don’t want to re-invent the wheel each time you perform an assessment. You also want work products to hand to newly hired security professionals so they understand the scope and approach of your test program. An up-front investment in developing this standardized process will save you time and effort in the long term.

- It helps ensure consistent results – If everyone isn’t testing for the same vulnerabilities, assessments can vary wildly. Of course, discovered vulnerabilities will largely depend on the skill level of the tester which is why hiring good personnel and implementing training programs is a must.

- It helps ensure consistent customer experience – unfortunately, when you’re an internal security professional many “customers” of your required security assessment program don’t view them as a value-added step (it competes with deadlines, resources, etc), so you need to demonstrate why it’s necessary and valuable. Nothing can hurt your case more than an inconsistent process where a customer doesn’t know what to expect from assessment to assessment. A large part of this is developing standardized deliverables, which I’ll cover shortly.

Another component of the “How” is what tools to use. If you’re not providing your team access to the necessary tools, you’re limiting their capability and productivity. These might include large commercial code and vulnerability scanners, as well as low-cost or open source tools. Over the years I’ve found my favorites (nmap, Metasploit, Burp, dirb, IDA, Immunity, etc) and I’ve written and incorporated many custom scripts. Even if your budget is minimal, you can obtain the tools you need to have an effective assessment program. Of course, the users of these tools must have adequate experience and training. Again, it’s not as simple as handing someone a tool and a process guide and saying “Go”. You have to have quality people!

I could easily write a lengthy, technically-focused post on testing tools and methodologies but I’m keeping this process-focused so I’ll leave it at this for now.

Determining the timing and frequency – The “When”

Now that you know what you want to assess and how you want to assess it, you need to figure out when the assessments will be performed. For newly deployed applications, the first thing you want to do is figure out how early you need to be involved in the process before the application is ready for implementation. I highly recommend you get involved as early as possible.

For internally-developed applications, this could be as early as the requirements phase, to ensure your security requirements are represented in the subsequent design and coding phases. Assuming you have a hands-on testing phase in your assessment process (and you should!) you’ll also want to ensure adequate time has been allocated in the software development schedule (with additional time for mitigation and retesting). A couple of suggestions here – first you’ll want to codify your requirements at a high level in policy, but also in more detail in some sort of standard or secure development guide. Providing as much detail to the developers as early as possible can help ensure a securely designed product (vs the typical bolted-on security after the fact). Second, you may consider any tools that can empower your developers to test for security themselves. One example could be a code scanning solution.

For 3rd-party applications, while you may not be able to influence design and development, you still need to be involved as early as possible, preferably before any money changes hands (at which point you may lose any leverage you previously had). You’ll want to ensure that those responsible for procuring and managing the application are aware of your security requirements and that the vendor can confirm that their product will meet them. Again, having these requirements documented and readily available can go a long way. If possible (and I almost always insist) you’ll also want to allocate time in advance for hands-on testing of the application (more on that later).

Even if you’re confident that you’ve inserted yourself as early in the process as possible, I still recommend implementing some additional “triggers” at various other points in the application deployment process. For example, if the application is to be deployed in a centrally-managed data center, ensure the respective server administrators are also aware of the security assessment requirement and have a means to verify it has been accomplished. The same could apply for firewall port requests and user account creation. For third-party applications, ensure those responsible for contract approvals and procurement are aware of the assessment requirement as well. The more triggers you have in place, the more likely you are to catch new applications that may have otherwise slipped through the cracks.

Security assessments are part of an application’s lifecycle, and unless the application is never updated, the environment in which it operates remains static, and the risk to the application or its data doesn’t change, you’ll probably want to implement a process to re-assess applications on a periodic basis. For this, the driving factor may be any significant change to the application or the data which it processes. This often requires software upgrades, changes to existing contracts, and/or additional funding so your existing process triggers may be sufficient.

If you have no shortage of resources you may also decide to assess existing legacy applications. If so, I recommend you apply some risk-based approach to prioritizing which applications are assessed first. Even if you don’t have the resources immediately, if possible, I recommend at least developing an internal vulnerability scanning program to assess your potential risks for all applications.

Assessment Deliverables

Every assessment should result in a deliverable, which is typically an assessment report. I usually construct my reports as follows:

- Section I: Basic Info

  - Overview

  - Scope

  - Methodology

  - Assumptions

- Section II: Summary of results

  - Overall Risk Score

  - Summary of Findings

- Section III: Assessment / Finding Details

  - Authentication

  - Session Management

  - Access Control, etc

- Appendices

Let’s take a brief look at each.

Overview, Scope, Methodology, Assumptions

This first section of the report provides a brief overview of the assessment – name of the application, names of the assessors, dates of the assessment, criticality of the application, sensitivity of the data it processes, and exposure (public vs. internal only). It also covers the extremely important assessment scope. I’ll cover this again in a bit, but I can’t emphasize enough how important it is to have all parties involved agree upon the assessment scope – what it does and does not cover, in terms of both application components as well as security controls assessed. This is very important for any hands-on testing that you will be performing, especially if it’s being performed against another organization’s infrastructure. In this section I also outline the methodology (approach and tools used) to provide the reader an understanding of how the assessment was performed. Lastly, I like to codify any assumptions or limitations. This includes the fact that this point-in-time assessment can in no way guarantee that all vulnerabilities were identified and any changes to application scope or design may require additional testing. I try to keep this first section limited to a page in length (no more than two).

Summary of Results

Section two of the report is a summary of the assessment results which includes the overall risk score as well as a summary of any identified findings/vulnerabilities. For the findings, I usually use a simple table format such as:

| Findings | | |
|---|---|---|
| Finding Description | Risk Score | Remediation Status |
| The application is vulnerable to… | High | Open |

You may add additional details but ideally this whole section will comprise no more than a single page that can provide the reader (possibly someone in senior management) a quick means of identifying the major risks uncovered during this assessment. Save the technical details for the next section!

Developing a risk scoring approach

In order to assign risk to a discovered finding/vulnerability you’ll need to adopt some sort of risk scoring methodology. I’ve seen a number of risk models, ranging from extremely simple to extremely complex. For me, there are a couple of factors to consider when developing your risk model:

- Not all vulnerabilities are created equal. A reflected XSS vulnerability on a post-authentication page of an internal application used by two people is probably not going to warrant the same risk score as a persistent XSS vulnerability located in the comments module of an unauthenticated, public-facing application. And yet, some risk models or automated scanners will assign the same level of risk to each. You have to consider all of the pertinent factors (environment, data sensitivity, system criticality, etc) and apply some critical thinking when assigning risk.

- Risk score calculations can’t be too complicated. I’ve seen 3rd party solutions that go way overboard…like assigning a risk score of Medium corresponding to a value of 1354.45 based on 34 distinct factors. My thought is if you can’t easily explain the scoring methodology to a non-technical, non-security person, it’s too complicated.

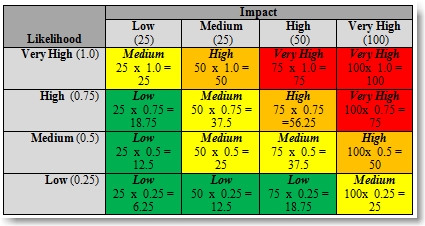

I’ve personally adopted the approach that risk is a product of Likelihood and Impact (R = L x I).

Likelihood

When it comes to Likelihood of a particular finding/vulnerability being exploited I tend to consider Means, Motivation, Opportunity, and the Mitigation provided by existing controls. First, what is the means required for an attacker to exploit the vulnerability? Does it require specialized equipment, extreme skill, etc? Second, what would be the motivation? Is the information gained by exploiting the vulnerability very valuable (PHI, PII, financial, strategic, etc)? Third, what is the opportunity for exploit? Is the application public facing? Does it require access to a valid user account? Are there other vulnerabilities in this application (or others) that could be used to help exploit this one? Finally, what additional controls (if any) exist that might provide some mitigation to this vulnerability?

It’s also important to define and consider potential threats and threat actors when determining your Likelihood score as these can certainly influence your overall risk.

Impact

When it comes to impact, the driving factors for me are the criticality of the application and/or its data, the effect it may have on other systems/applications, the regulatory implications, the reputational harm it could cause the organization, and the harm it could do to individual users.

You may choose to assign numerical values to each of the factors that determine Likelihood and Impact and have a simple formula to determine the resulting risk score or you may choose to develop some more granular definitions for each and use a more subjective means to assign the score. Regardless, I always include a written justification along with my assigned ratings so the customer has a good idea why I assigned it the level of risk I did.

I personally like to use a four-tiered scoring approach for both likelihood and impact, ranging from Low to Very High. For example, if I discover a SQL injection vulnerability that is easy to detect and exploit on a publicly accessible search function of a public-facing system that would provide access to very valuable sensitive data with no additional mitigating controls, I might assign a Likelihood score of Very High. If that same vulnerability can be used to circumvent controls typically provided by authentication and access control and provide direct access to sensitive data as well as Administrator access to the underlying system, which in turn, puts other, extremely critical, systems at risk, I might also assign it an Impact score of Very High.

Since in this example, Risk is a product of Impact and Likelihood you might use something like the following to determine your overall vulnerability risk score (which in the above example would naturally be “Very High”):

There’s nothing magical or proprietary about this approach. You can do a quick Google search and find dozens of examples just like this. You may find this to be too qualitative or simplistic and decide to incorporate additional quantitative scoring criteria or add additional factors in your overall risk score. You may also choose to add or remove additional risk levels (very low, critical, etc). Either way, it’s important that you make your scoring approach fully transparent and easy for your customers to understand, especially if it’s going to drive their own decision making.

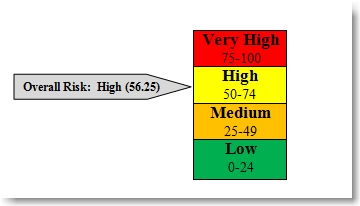
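For readers who prefer code to a chart, the R = L x I idea can be sketched as a small lookup in Python. This is a minimal sketch under stated assumptions. The four tier names come from the text above, but the numeric ranks (1 to 4) and the cut-offs used to turn the product back into a tier are assumptions made for illustration. They are not necessarily the values in the matrix pictured in this post, so adjust them to match your own model.

```python
# Four tiers, as described above, ranked 1 (Low) through 4 (Very High).
LEVELS = ["Low", "Medium", "High", "Very High"]


def risk_score(likelihood, impact):
    """Map Likelihood and Impact tiers to a risk tier via the product of their ranks."""
    product = (LEVELS.index(likelihood) + 1) * (LEVELS.index(impact) + 1)
    # Assumed cut-offs on the 1..16 product; these thresholds are illustrative only.
    if product >= 12:
        return "Very High"
    if product >= 6:
        return "High"
    if product >= 3:
        return "Medium"
    return "Low"


if __name__ == "__main__":
    # Print the full matrix: one row per Likelihood tier, one column per Impact tier.
    for likelihood in reversed(LEVELS):
        row = [risk_score(likelihood, impact) for impact in LEVELS]
        print(f"{likelihood:>9} | " + " | ".join(f"{cell:>9}" for cell in row))
```

With these assumed cut-offs, the SQL injection example above (Likelihood Very High, Impact Very High) comes out as Very High, which matches the text. Whatever thresholds you choose, publish them alongside your reports so customers can see how a score was reached.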

Once you’ve documented all of your vulnerabilities and assigned each a risk score, you have to determine how you’re going to assign a corresponding risk score to the overall application. Naturally you’re going to want to consider each vulnerability when determining this score. You could choose to numerically weight the various vulnerabilities (1 x Low, 2 x Medium, 3 x High, etc) but you have to consider scenarios where an application has 30 Low vulnerabilities and 1 High. Are the 30 Low vulnerabilities any more risky than if there were say 10 or 5 and should they cumulatively rate higher than the single High risk finding? Probably not.

I prefer to use the most simplistic approach – the highest individual vulnerability drives the overall risk rating for the application. For example, if you’ve identified 1 High vulnerability, 5 Mediums, and 2 Lows, your overall risk score is going to be High. If you remediate the High vulnerability, the risk score then reduces to Medium (until all Medium vulnerabilities are remediated at which time it further reduces to Low). This helps drive remediation prioritization and makes it very simple for the customer to understand.
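The difference between the weighted approach and the “highest finding wins” approach is easy to see in a few lines of Python. This is a minimal sketch. The weights 1, 2, and 3 follow the example above, while the weight of 4 for Very High and the function names are assumptions added for illustration.

```python
SEVERITY_ORDER = ["Low", "Medium", "High", "Very High"]
# 1/2/3 follow the weighting example in the text; 4 for Very High is an assumption.
WEIGHTS = {"Low": 1, "Medium": 2, "High": 3, "Very High": 4}


def weighted_total(open_findings):
    """Sum the per-finding weights (the approach the text cautions against)."""
    return sum(WEIGHTS[severity] for severity in open_findings)


def overall_rating(open_findings):
    """Return the highest severity among open findings, or None if there are none."""
    if not open_findings:
        return None
    return max(open_findings, key=SEVERITY_ORDER.index)


if __name__ == "__main__":
    many_lows = ["Low"] * 30 + ["High"]
    mixed = ["High"] + ["Medium"] * 5 + ["Low"] * 2

    print(weighted_total(many_lows), overall_rating(many_lows))
    print(weighted_total(mixed), overall_rating(mixed))

    # Remediating the single High finding drops the application to Medium.
    mixed.remove("High")
    print(overall_rating(mixed))
```

The weighted totals for the two applications are 33 and 15, which suggests the first is more than twice as risky, yet both share the same worst-case finding. Driving the rating from the highest open finding rates both as High and makes the effect of remediation obvious.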

If you want to read more on risk assessment and risk scoring I recommend taking a look at NIST SP 800-30 Rev. 1 (http://csrc.nist.gov/publications/PubsSPs.html).

Assessment / Finding Details

This section of the report should be written with the technical audience (i.e. developers) in mind and include a detailed walk-through of the testing results. Each security control category (access control, authentication, etc) deemed to be within scope should be represented in this portion of the report. I like to organize my assessment details section by these categories.

For example, for Authentication, I provide a high-level overview of how the authentication process works (based on the information I gathered during the assessment). I might cover how the login process works as well as any additional authentication-related functions (such as forgot-password or user account registration). I typically provide screenshots and enough information that anyone reading the report can get a good understanding of exactly how all of the authentication functionality is implemented. This may seem like overkill, especially from the perspective of a third-party consultant who may never see an application again after their testing engagement. However, if you’re part of an internal organizational security office, there’s a good chance you’ll need to retest the application in the future (and you’ll be glad you recorded these details when that time comes).

Also, as an internal security office, it’s important you leverage other organizational departments (and vice versa). For example, Internal Audit departments frequently assess process-based controls such as user account management. They may be able to re-use some of your assessment information rather than gathering it all again. You might even do the same by reviewing audit reports (assuming they’ve audited the application/system you’re testing). You might also be able to use the Audit Department to follow up on the remediation of any process-based findings you uncovered during your assessment. This can save you from having to assess (or re-assess) some of these controls and allow you to focus on what I consider to be the real value-add of a security assessment – the hands-on, technical testing. Believe it or not, this information re-use can also really help your cause when it comes to building a positive relationship with your internal organizational customers. Think about it…if the Internal Audit department just completed a month-long audit of a department’s application inventory and you come along two weeks later asking for some of the same information, you’re probably not going to get a great reception!

That leads me to one more point – try to avoid duplicating the efforts of other departments. If you find that process-based security controls are adequately reviewed by your Audit department, you may simply defer to them for that portion of your assessment. If the value-add comes from your technical expertise, then focus on that!

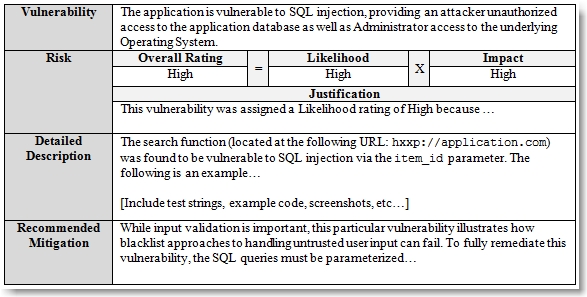

After providing an overview of how a control/function (e.g. authentication) is implemented, you should detail each vulnerability discovered. I use a format similar to the following:

When I develop a high-level vulnerability description, I like to include the impact to make it easy for the reader to quickly understand what it is and why it’s bad.

The application was found to be vulnerable to SQL injection, which allowed for unauthorized access to sensitive financial data and the complete compromise of the underlying system as well as other systems on the organizational network.

Reading that should give even a non-technical reader an understanding of the level of risk a finding presents.

The detailed description of a finding is also extremely important. This should be a complete walk-through of how you discovered the vulnerability, how you exploited it, and what you were able to access/do as a result. It should include screenshots, example links, and any scripts/code used. These detailed descriptions are going to make up the bulk of your assessment report and can be several pages long. They should be detailed enough that another security professional or developer can reproduce your findings given the information you provide. I often include supplementary videos to demonstrate the exploit in action. Accurately demonstrating how a vulnerability can work and what risk it poses is critical to getting the necessary resources applied for remediation.

I also always include a recommended mitigation section. Unless I have complete access to the underlying source code, I never make assumptions about exactly what the cause may be (though it’s often evident). I do, however, provide general guidance and requirements on how to fix a given vulnerability (input validation, output encoding, parameterized queries, etc) as well as links to pertinent resources (MSDN, OWASP, etc).

One other thing that I often include in the detailed section of my assessment reports is an example of how multiple vulnerabilities can be used together to exploit the application and possibly other systems on the network. Can a CSRF vulnerability be used to target an otherwise protected function that was found to be vulnerable to stored XSS or SQLi and how can those subsequent vulnerabilities be used to exfiltrate data or exploit other systems? You want both the technical and non-technical recipients of your report to have that “Oh, I get it” moment when trying to understand why you assigned the risk rating you did.

Appendices

I usually also include one or two appendices with any supplementary information such as code/scripts that were too long to fit into the assessment details section. I also always include an appendix that outlines the definitions and formulas used in the risk scoring methodology for full transparency.

Tracking vulnerabilities and mitigation actions

After a vulnerability is identified, it should be tracked until either the risk is accepted or the necessary mitigation steps have been implemented. Depending on the size of your organization and the number of assessments you perform, this can become extremely cumbersome to do without some form of automation. Trust me, if you’re performing a new assessment every 7-10 days, you’ll quickly forget the vulnerabilities discovered in the months prior and you don’t want to lose sight of open mitigation items.

You might consider using an existing tool at your disposal (such as SharePoint), developing your own, or investing in an Enterprise GRC product. Before you do, I recommend identifying what your requirements and goals are: are you using these vulnerabilities to drive larger Enterprise initiatives? Do you need to slice and dice the data and generate ad-hoc reports? Will executives or other departments require dashboard-style access?

You’ll also want to implement some regular review process to ensure open vulnerabilities are being mitigated on schedule.
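Whatever tool you choose, the core of the tracking and review process is a list of findings with an owner-agreed due date and a status. As a rough sketch (the field names, status values, and sample data below are all hypothetical), a regular review could start from something like this:

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Finding:
    app: str
    title: str
    risk: str
    due: date              # agreed remediation date
    status: str = "open"   # assumed values: "open", "accepted", or "closed"


def overdue(findings: List[Finding], today: date) -> List[Finding]:
    """Return open findings whose agreed remediation date has passed."""
    return [f for f in findings if f.status == "open" and f.due < today]


# Hypothetical sample data.
tracker = [
    Finding("Payroll portal", "SQL injection in search", "High", date(2024, 3, 1)),
    Finding("Payroll portal", "Verbose error messages", "Low", date(2024, 6, 1)),
    Finding("HR intranet", "Stored XSS in profile", "Medium", date(2024, 2, 1),
            status="accepted"),
]

for f in overdue(tracker, today=date(2024, 4, 15)):
    print(f"{f.app}: {f.title} ({f.risk}) was due {f.due}")
```

Findings whose risk has been accepted are deliberately excluded from the overdue list, since they no longer have a pending mitigation action.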

The Assessment Process

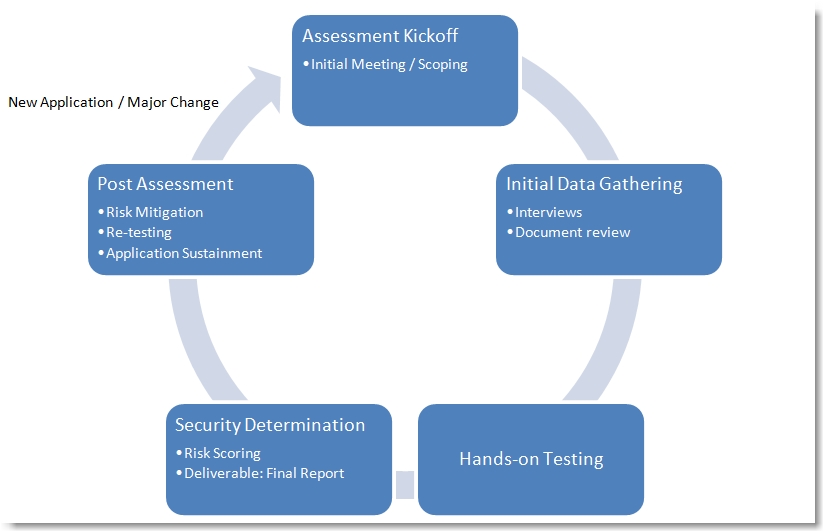

Ok, so you know what applications/systems you want to assess, you’ve identified the security controls you’re going to assess, you’ve documented your assessment procedures, obtained the necessary tools and a qualified staff, and developed your final report deliverable template. You’re now ready to perform an assessment…but what should a typical assessment entail?

Here’s a very basic, high-level view of how I conceptualize an assessment:

Assessment Kickoff

Starting from the point when the requirement for an assessment is identified, the first thing I typically do is have a meeting with the appropriate stakeholders – application owner, application administrators (if different), developers, and/or third-party vendors. During this meeting I:

- outline the organizational security requirements (providing any pertinent supporting documentation such as policies and guides),

- discuss the overall security assessment process and what it will entail, and

- agree upon an assessment scope (to help determine the necessary time and resources).

Depending on where you are in the application/system lifecycle, it may be too early to go any further at this point. However, if possible, you may also want to try to schedule your hands-on testing period. My office is typically booked weeks/months in advance and most third-party assessors require more than just a couple of days of lead time, so the sooner the better!

Initial Data Gathering

If this is a third-party application that’s already developed and being procured, I try to accomplish the bulk of the interview portion of my assessment during the initial call as well. I typically walk through each security control category (authentication, session management, access control, etc) and ask the relevant questions of the third-party technical resources. Some people choose to accomplish this process solely via written questionnaires. I’m not a fan of this approach and prefer an interview-style approach for several reasons.

- First, written questions are always open to interpretation. For example, for session management, you might ask how the application protects session cookies and receive a response of “not applicable”. A subsequent follow-up email exchange reveals that the vendor claims they do not use “session cookies” but instead use “authentication tokens”. By the time you get that straightened out you realize you could have resolved it with a 30 second phone exchange.

- Second, it’s difficult to develop questions with the right level of detail. Too little detail (yes/no questions) and the information gathered is of little use. Too much detail and you get objections for it being too complex.

- Third, it’s difficult to accurately reflect question logic in a questionnaire. For example, if they answer “Yes” to a question asking if they implement a remote access solution, you might have 5 other pertinent questions that would otherwise not have to be answered. Without some web-based tool to handle this logic for you, a “paper” based questionnaire in Excel or Word can quickly get out of hand.
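If you do end up building or buying a tool to handle that question logic, the underlying idea is just conditional follow-ups. The sketch below is a minimal illustration under assumed question IDs, wording, and an `ask_if` convention that are all hypothetical; it only shows how follow-up questions can be hidden unless a triggering answer is given.

```python
# Each question may depend on a specific answer to an earlier question.
# Question IDs, wording, and the "ask_if" key are hypothetical.
QUESTIONS = [
    {"id": "remote_access", "text": "Do you implement a remote access solution?"},
    {"id": "ra_mfa", "text": "Is multi-factor authentication required for remote access?",
     "ask_if": ("remote_access", "yes")},
    {"id": "ra_logging", "text": "Are remote sessions logged and reviewed?",
     "ask_if": ("remote_access", "yes")},
    {"id": "backups", "text": "How are backup media protected?"},
]


def applicable_questions(answers: dict) -> list:
    """Return the IDs of the questions that apply given the answers so far."""
    shown = []
    for question in QUESTIONS:
        condition = question.get("ask_if")
        # Unconditional questions always apply; conditional ones only
        # when the earlier answer matches the trigger value.
        if condition is None or answers.get(condition[0]) == condition[1]:
            shown.append(question["id"])
    return shown


print(applicable_questions({"remote_access": "no"}))   # ['remote_access', 'backups']
print(applicable_questions({"remote_access": "yes"}))  # all four question IDs
```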

When you have the right people on the phone or in person, you can adjust the detail and direction of your questions on the fly and save a lot of assumptions, time, and effort. One thing I will suggest is that if you do bypass the written questionnaire for an in-person or over-the-phone interview, be sure to sum up the discussion in writing and get written confirmation from all necessary parties agreeing upon the collected information and derived conclusions about the implemented security controls.

A couple additional notes about questionnaires and interviews in general.

- First, sometimes they are all you have at your disposal to assess a control. For example, if a vendor is hosting your application, the execution of certain controls, such as security of the backup tapes, is completely under their control. You can outline and agree upon your requirements and obtain written/contractual confirmation that they will be met, but unless you can 1) visit the vendor, 2) contract a third party to conduct an on-site assessment or 3) review an existing third-party assessment, you may have to trust that the vendor has implemented the controls as stated. That being said, you might be surprised at the level of detail you uncover about the implementation of security controls simply by asking some pointed questions.

- Second, if you can test a control, do so, but don’t avoid asking some questions up-front. Even when I have a scheduled hands-on test, I almost always ask the developer or vendor how they are protecting against such things as XSS or SQL injection attacks. Their verbal responses are often indicators that there may be something wrong. For example, if they’ve never heard of the terms “input validation”, “output encoding”, or “parameterized queries”, I’m either talking to the wrong people or there’s a good chance I’m going to find some problems when it comes time to test.

Sometimes the initial kickoff meeting and security control interviews have to be broken up into more than one call/meeting, but I try to keep the number as low as possible. These meetings often involve the vendor, developer, or application owner providing additional, supplemental documentation (or if you’re lucky, source code) for review. This documentation not only helps confirm that a security control is properly implemented but can also prove to be very useful reference material in your hands-on testing phase. One key to these initial calls/meetings is to ensure the right people are involved. You don’t want the marketing person to be the one fielding the technical questions about how role-based access is implemented and enforced.

Hands-on Testing

Once the up-front data collection is completed, it’s time to perform the hands-on testing, which will be the primary value-added portion of your assessment program. I’ve lost track of the number of times I’ve heard “we definitely protect against [fill in the blank vulnerability]” only to discover it during my test phase. I test dozens of products each year and it’s rare that I don’t discover at least one vulnerability (many of which can pose significant risk). Trust me, just because you’re told the application is used by all of the Fortune 100 or that “everyone” in your industry is using the product with no complaints, doesn’t mean you shouldn’t test it before implementation! I’ve found vulnerabilities in major commercial products that would have otherwise gone unnoticed if hands-on testing had not been a primary focus.

As a result, this phase constitutes the bulk of most of my assessments, both in time and effort, and it’s important to plan properly. Before you perform any testing, you must ensure that you’ve documented the intended scope and agreed upon time frame and received written confirmation from all necessary parties that this information is acceptable and you have approval to test.

You’re also going to need to arrange for the necessary access. This often requires standing up a test instance of the application, whitelisting IP addresses, configuring networking devices, creating test user accounts (representing all of the applicable user types), etc. This can require some additional lead time so you’ll need to plan in advance.

When it comes to scheduling, I usually allot 7-10 business days per test. This gives me time to familiarize myself with how the application works, perform the testing, and generate the report. This is a very conservative timeline and may need to be lengthened depending on the size and scope of the test. The first 1-2 days are usually spent familiarizing myself with the application. This may include navigating the application, spidering to discover content, and monitoring each request to identify the parameters passed from client to server, the content of each, and the expected behavior. Once I have an idea of what the application does, I spend the next 4-7 days testing for vulnerabilities. I document each finding as I go, recording my test results, taking screenshots, etc. A good portion of my final report is composed during this time. I always validate that my results are repeatable and ensure the supporting documentation is clear enough for someone else to replicate. The last 1-2 days are spent writing/cleaning up the final report before distribution.
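For calendar planning, it can help to add up the phase ranges and translate them into dates. The sketch below uses the per-phase ranges quoted above (which sum to 6-11 business days, bracketing the usual 7-10 day allotment); the `add_business_days` helper and the kickoff date are hypothetical, and the helper ignores holidays.

```python
from datetime import date, timedelta

# Phase durations in business days, taken from the ranges above: (min, max).
PHASES = {"familiarization": (1, 2), "testing": (4, 7), "reporting": (1, 2)}


def add_business_days(start: date, days: int) -> date:
    """Return the date `days` business days after `start` (weekends skipped, holidays ignored)."""
    current = start
    while days > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            days -= 1
    return current


shortest = sum(low for low, _ in PHASES.values())
longest = sum(high for _, high in PHASES.values())
print(shortest, longest)  # 6 11

# Hypothetical kickoff date: Monday, 3 June 2024.
start = date(2024, 6, 3)
print(add_business_days(start, longest))  # 2024-06-18
```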

Again, I could easily write an entire post (or more) on the testing process but I’ll save that for another time.

Security Determination

I usually consider this last portion of the assessment to be the “security determination” phase of the process. During this time I’m assessing the discovered vulnerabilities and assigning the appropriate risk scores, which ultimately determine the overall risk score assigned to the application or system.

I usually like to distribute the final report to any internal organizational stakeholders first to discuss the vulnerabilities and the mitigation plan before including external/3rd party vendors. When sharing your report with external parties, be sure to scrub it of any sensitive data that you don’t need to disclose. Even with the necessary contracts and agreements in place, I often remove internal IP addresses and other info if it has no consequence to the external party understanding how to replicate the vulnerability.
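Part of that scrubbing can be automated as a first pass. The following sketch redacts IPv4 addresses in the RFC 1918 private ranges from report text; the `scrub` helper, the placeholder string, and the sample sentence are assumptions for illustration. It will not catch hostnames, IPv6 addresses, or other sensitive details, so a manual review is still needed.

```python
import re

# Matches IPv4 addresses in the RFC 1918 private ranges:
# 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16.
_OCTET = r"(?:25[0-5]|2[0-4]\d|1?\d?\d)"
PRIVATE_IP = re.compile(
    r"\b(?:"
    rf"10(?:\.{_OCTET}){{3}}"
    rf"|172\.(?:1[6-9]|2\d|3[01])(?:\.{_OCTET}){{2}}"
    rf"|192\.168(?:\.{_OCTET}){{2}}"
    r")\b"
)


def scrub(text: str, placeholder: str = "[internal IP removed]") -> str:
    """Replace private IPv4 addresses in `text` with a placeholder."""
    return PRIVATE_IP.sub(placeholder, text)


# Hypothetical report excerpt; 203.0.113.7 is a public documentation address.
sample = ("Exploit sent from 10.20.30.40 to https://app.example.com "
          "(203.0.113.7), pivoted to 192.168.1.15.")
print(scrub(sample))
```

Public addresses are left untouched on purpose, since the external party may need them to replicate the finding.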

Sometimes findings may result in immediate acceptance of risk (though it’s important to identify who has the authority to accept that risk and document it accordingly). Otherwise, it’s important to agree upon and document a planned remediation/mitigation action with the necessary parties, to include implementation timelines and, where appropriate, resources required. Once that’s done you’ll want to track them until they’re closed (as discussed earlier).

Post-Assessment

You’ll likely need to retest the application after the mitigations have been put in place. To preserve your sanity and manage your schedule effectively, I recommend you not agree to any testing until all fixes are in place (or at least certain milestones are met). If you’ve discovered 20 vulnerabilities in an application, you don’t want 20 distinct retest events. I also recommend that you allot time on your testing calendar for these retests – I typically allow 1-3 days, depending on the scope. If you don’t plan for that, you’ll quickly find other assessments being impacted as a result.

After retesting, I typically like to modify the original report (with additional sections added to each vulnerability write-up detailing the results of the retest effort). Again, you should provide the same level of detail that you provided from the initial test. Any adjustment to risk scores as a result of the implemented mitigation actions should also be reflected in the updated report and re-distributed to all stakeholders.

Eventually an application is going to be put into production, either before or after all remediation actions have been taken. Sometimes the residual risk may be limited to the business unit deploying the application (such as a threat to system availability) and other times the application may pose a security risk to the larger environment and other systems that operate within it. In either scenario, it’s important to identify who has the authorization to accept whatever level of risk has been identified to that point.

Conclusion

I hope this post provided some useful information for anyone considering starting (or improving upon) an internal security assessment program within their organization. To recap, here are a few high-level points:

- As Marcus Lemonis might say, you must have quality people, processes, and product – failure in any one area will be detrimental to your success

- When developing your assessment program, define the why, who, what, how, and when

  - Why – Why do you need an assessment program (driving factors and goals)?

  - Who – Who is going to make up your assessment team and what skills will they require?

  - What – What is the scope (what systems to assess and what controls to test)?

  - How – How will you test the security controls, what tools will you need, etc?

  - When – When will you perform these assessments (timing, frequency, etc)?

- A security assessment isn’t a documentation drill – if you’re not performing hands-on testing, you’re not accurately assessing security/risk

- Adopt a risk scoring model that is meaningful and easy to understand

- Consider your target audience when developing your deliverable(s). The report should provide the summary needed for executive management to make risk-based decisions as well as the details needed by the technical staff responsible for implementing mitigation actions.

Until next time,

– Mike

Security Sift
